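- 2020 Korean Artificial Intelligence Association Winter School Notes - 1. Prof. Jaegul Choo (Korea University), Human in the loop

- 2020 Korean Artificial Intelligence Association Winter School Notes - 2. Prof. Gunhee Kim (Seoul National University), Pretrained Language Model

- 2020 Korean Artificial Intelligence Association Winter School Notes - 3. Prof. Il-Chul Moon (KAIST), Explicit Deep Generative Model

- 2020 Korean Artificial Intelligence Association Winter School Notes - 4. Prof. Jinwoo Shin (KAIST), Adversarial Robustness of DNN

- 2020 Korean Artificial Intelligence Association Winter School Notes - 5. Dr. Juho Lee (AITrics), Set-input Neural Networks and Amortized Clustering

- 2020 Korean Artificial Intelligence Association Winter School Notes - 6. Prof. Eunho Yang (KAIST), Deep Generative Models

- 2020 Korean Artificial Intelligence Association Winter School Notes - 7. Dr. Saehoon Kim (AITrics), Meta Learning for Few-shot Classification

- 2020 Korean Artificial Intelligence Association Winter School Notes - 8. Prof. Sungbin Lim (UNIST), Automated Machine Learning for Visual Domain

- 2020 Korean Artificial Intelligence Association Winter School Notes - 9. Prof. Seung-won Hwang (Yonsei University), Knowledge in Neural NLP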

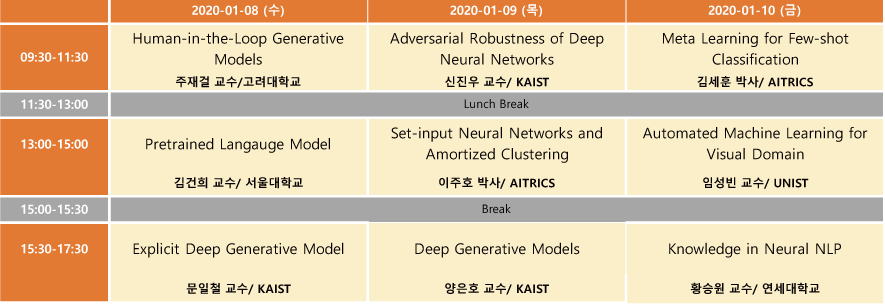

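I signed up for the 2020 Korean Artificial Intelligence Association winter school and attended it for three days, January 8 to 10, 2020. Nine speakers presented in total; the program schedule is as follows.

I originally planned to cover everything in a single post, but each topic looked substantial enough that I decided to write a series with one lecture per post. This first post covers the talk “Human in the loop” by Prof. Jaegul Choo of Korea University.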

- Recognition vs. Generation

- Recognition : compresses a large number of input values into a small number of output values.

- Generation : expands a small number of input values into a large number of output values.

- Translation : transforms a large number of input values into another large number of output values.

- Conditional Generation : An additional input is given, which steers the generation process in a user-driven manner.

- Applications of Generative Models

- Realistic samples for artwork, super-resolution, colorization, inpainting, etc.

- GANs

- Terminology

- Generative : It is a model for generation

- Adversarial : Improves the generation quality via adversarial training

- Networks : The model is formed as neural networks.
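To make the "adversarial" part a bit more concrete, here is a minimal sketch of the standard GAN losses computed on a handful of made-up discriminator outputs. The function names and the sample probabilities are my own assumptions for illustration, not something shown in the lecture, and the generator loss uses the common non-saturating variant.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: D wants D(x) -> 1 on real and D(G(z)) -> 0 on fake."""
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: G wants D(G(z)) -> 1."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs (probability that a sample is real).
d_real = [0.9, 0.8, 0.95]
d_fake = [0.1, 0.3, 0.2]
print(discriminator_loss(d_real, d_fake))
print(generator_loss(d_fake))
```

The two networks pull in opposite directions: the generator loss drops as the discriminator is fooled more often, which is exactly what raises the fake term of the discriminator loss.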

- Current Status of GANs :

- PGGAN : Karras et al. Progressive Growing of GANs for Improved Quality, Stability, and Variation, ICLR’18

- StyleGAN : Karras et al. A Style-Based Generator Architecture for Generative Adversarial Networks, CVPR’19

- Several types of GANs

- DCGAN : No pooling layers (strided convolutions instead), batch normalization, and the Adam optimizer (lr=0.0002, beta1=0.5, beta2=0.999)

- pix2pix : Paired Image-to-Image Translation

- CycleGAN : Unpaired Image-to-Image Translation

- CGAN (Conditional GAN) : Mirza & Osindero, Conditional Generative Adversarial Nets, 2014

- ACGAN (Auxiliary Classifier) : Odena et al, Conditional Image Synthesis With Auxiliary Classifier GANs, 2016

- Improves the training of GANs using class labels

- StarGAN : Choi et al, StarGAN: Unified Generative Adversarial Networks for Multi-Domain Image-to-Image Translation, CVPR 2018

- Multi-Domain Image-to-Image translation
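As a rough illustration of the DCGAN note above (strided convolutions instead of pooling), the sketch below applies the usual output-size formulas for strided and transposed convolutions. The kernel size 4, stride 2, padding 1 and the 64x64 target resolution are assumptions picked for illustration, and the helper names are hypothetical; only the Adam values come from the notes.

```python
def conv_out(size, kernel=4, stride=2, padding=1):
    """Output size of a strided convolution (downsamples without pooling)."""
    return (size + 2 * padding - kernel) // stride + 1

def deconv_out(size, kernel=4, stride=2, padding=1):
    """Output size of a transposed convolution (upsamples in the generator)."""
    return (size - 1) * stride - 2 * padding + kernel

# Generator: 1x1 latent -> 4x4 (kernel 4, stride 1, no padding), then doubling.
up = [deconv_out(1, kernel=4, stride=1, padding=0)]
while up[-1] < 64:
    up.append(deconv_out(up[-1]))
print(up)  # [4, 8, 16, 32, 64]

# Discriminator: each strided convolution halves the resolution.
down = [64]
while down[-1] > 4:
    down.append(conv_out(down[-1]))
print(down)  # [64, 32, 16, 8, 4]

# Adam hyperparameters as listed in the notes above.
ADAM_CONFIG = {"lr": 0.0002, "beta1": 0.5, "beta2": 0.999}
```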

- Motivations for Human-in-the-Loop Approach

- User intent is often too complex to describe as a simple categorical variable.

- Flexible, sophisticated forms of user inputs are necessary

- Some generated outputs may be neither satisfactory to users nor aligned with their intent.

- Users should be able to partially edit the output in an interactive manner

- User Inputs in Generative Models

- Global (male or female) vs. Local (strokes and scribbles)

- Reference-based vs. non-reference-based

- Strokes and scribbles

- Positive vs. Negative clicks (segmentation)

- Particular colors (colorization)

- https://paintschainer.preferred.tech/index_en.html

- Reference image

- User’s own vs. one among a pre-given set

- Concatenation-based

- StarGAN : Choi et al, StarGAN: Unified Generative Adversarial Networks for Multi-Domain Image-to-Image Translation, CVPR 2018

- DRIT : Lee et al, Diverse Image-to-Image Translation via Disentangled Representations, ECCV 2018
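A minimal sketch of what concatenation-based conditioning can look like: the condition (here a one-hot domain label) is replicated over the spatial dimensions and appended to the image as extra channels, which is roughly how StarGAN-style models feed the target domain to the generator. The nested-list tensor layout, the function names, and the 5-domain label are assumptions made for this example.

```python
def one_hot(index, num_domains):
    """One-hot encoding of a target domain index."""
    return [1.0 if i == index else 0.0 for i in range(num_domains)]

def concat_label_channels(image, label):
    """image: list of channels, each an H x W nested list.
    Returns the channels with one constant H x W map per label entry appended."""
    height, width = len(image[0]), len(image[0][0])
    label_maps = [[[value] * width for _ in range(height)] for value in label]
    return image + label_maps

# Toy 3-channel 2x2 "image" and a hypothetical 5-domain label.
image = [[[0.5, 0.5], [0.5, 0.5]] for _ in range(3)]
conditioned = concat_label_channels(image, one_hot(2, 5))
print(len(conditioned))  # 8 channels: 3 image + 5 label
```

The generator then sees the condition at every pixel, so nothing else in the architecture has to change apart from the number of input channels.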

- Normalization-based

- MUNIT : Huang et al, Multimodal Unsupervised Image-to-Image Translation, ECCV 2018

- GDWCT : Cho et al, Image-to-Image Translation via Group-wise Deep Whitening-and-Coloring Transformation, CVPR 2019

- SPADE : Park et al, Semantic Image Synthesis with Spatially-Adaptive Normalization, CVPR 2019

- GauGAN : Interactive Tool of SPADE, http://nvidia-research-mingyuliu.com/gaugan/
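For the normalization-based family, the shared idea is that the reference (or condition) controls the scale and shift applied after normalizing the content features. The sketch below shows adaptive instance normalization (AdaIN), the form used in MUNIT, on a single flattened feature channel. The function name and toy values are assumptions; SPADE differs in that the scale and shift vary per pixel and are predicted from the segmentation map, and GDWCT uses a whitening-and-coloring transform that is not covered here.

```python
import statistics

def adain(channel, gamma, beta, eps=1e-5):
    """Normalize one feature channel to zero mean and unit variance,
    then apply a style-dependent scale (gamma) and shift (beta)."""
    mean = statistics.fmean(channel)
    var = statistics.pvariance(channel, mu=mean)
    std = (var + eps) ** 0.5
    return [gamma * (x - mean) / std + beta for x in channel]

# Toy content features; gamma and beta would normally come from the style input.
content_channel = [1.0, 2.0, 3.0, 4.0]
styled = adain(content_channel, gamma=2.0, beta=10.0)
print(styled)
```

After the call, the channel has mean close to `beta` and standard deviation close to `gamma`, regardless of the statistics of the content features.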

- GANPaint

- A user edits a generated image or a photograph with high-level concepts rather than pixel colors

- https://www.youtube.com/watch?v=yVCgUYe4JTM&feature=youtu.be

- Bau et al, GAN Dissection: Visualizing and Understanding Generative Adversarial Networks, ICLR 2019

- http://gandissect.res.ibm.com/ganpaint.html

- https://github.com/CSAILVision/gandissect

- Human-machine interaction is almost real-time

- Semantic Photo Manipulation

- Bau et al, Semantic Photo Manipulation with a Generative Image Prior, SIGGRAPH 2019

- https://www.youtube.com/watch?v=q1K4QWrbCRM&feature=youtu.be

- Interactive Colorization

- Zhang et al, Real-Time User-Guided Image Colorization with Learned Deep Priors, SIGGRAPH 2017

- Supports both local and global hints

- Global hints can incorporate characteristics of a given reference image, such as its color histogram

- Concatenation-based conditioning
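One way to picture concatenation-based conditioning with local hints: the grayscale image is stacked with sparse color hints and a binary mask marking where the user clicked. This is a simplified sketch under my own assumptions (nested-list tensors, a hypothetical `build_colorization_input` helper, hint colors given as an (a, b) pair, global hints omitted), not the exact input format of the paper.

```python
def build_colorization_input(gray, hints):
    """gray: H x W lightness values.
    hints: dict mapping (row, col) -> (a, b) color chosen by the user.
    Returns 4 channels: lightness, hint a, hint b, and a binary hint mask."""
    height, width = len(gray), len(gray[0])
    hint_a = [[0.0] * width for _ in range(height)]
    hint_b = [[0.0] * width for _ in range(height)]
    mask = [[0.0] * width for _ in range(height)]
    for (row, col), (a, b) in hints.items():
        hint_a[row][col] = a
        hint_b[row][col] = b
        mask[row][col] = 1.0  # tells the network a hint exists at this pixel
    return [gray, hint_a, hint_b, mask]

# Toy 2x3 grayscale image with a single user click at row 0, column 1.
gray = [[0.2, 0.4, 0.6], [0.1, 0.3, 0.5]]
channels = build_colorization_input(gray, {(0, 1): (0.3, -0.2)})
print(len(channels), channels[3])
```

The mask channel matters because a hint value of zero is itself a valid color, so the network needs a separate signal for "no hint given here".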

- Sangkloy et al, Scribbler: Controlling Deep Image Synthesis with Sketch and Color, CVPR 2017

- Interactive Colorization via Sketch and Color Strokes

- Simulating User’s Sketch Inputs

- Zhang et al, Deep Exemplar-based Video Colorization, CVPR 2019

- Sun et al, Adversarial Colorization Of Icons Based On Structure And Color Conditions, ACM MM 2019

- Lee et al, Reference-Based Sketch Image Colorization using Augmented Self Exemplar and Dense Semantic Correspondence, under review

- Bahng et al, Coloring with Words: Guiding Image Colorization Through Text-based Palette Generation, ECCV 2018

- Interactive Segmentation

- Acuna et al, Efficient Interactive Annotation of Segmentation Datasets with Polygon-RNN++, CVPR 2018

- Ling et al, Fast Interactive Object Annotation with Curve-GCN, CVPR 2019

- Wang et al, Object Instance Annotation with Deep Extreme Level Set Evolution, CVPR 2019

- Future Research Directions

- Support for real-time, multiple iterative interactions

- Reflecting higher-order user intent in multiple sequential interactions

- Revealing inner workings and interaction handles

- e.g., explicitly using an attention module

- Better simulating user inputs in the training stage

- Incorporating data visualization and advanced user interfaces

- Leveraging hard rule-based approaches

- Incorporating users’ implicit feedback and online learning

- Useful Links

- 2019 ICML Workshop on Human in the Loop Learning (HILL)

- 2020 IUI Workshop on Human-AI Co-Creation with Generative Models

- Key researchers

- David Bau : https://people.csail.mit.edu/davidbau/home/

- Sanja Fidler : https://www.cs.utoronto.ca/~fidler/

- Richard Zhang : https://richzhang.github.io/

- Jun-Yan Zhu : https://people.csail.mit.edu/junyanz/
